Preparing VMware and Hardware Environment

VMware Considerations

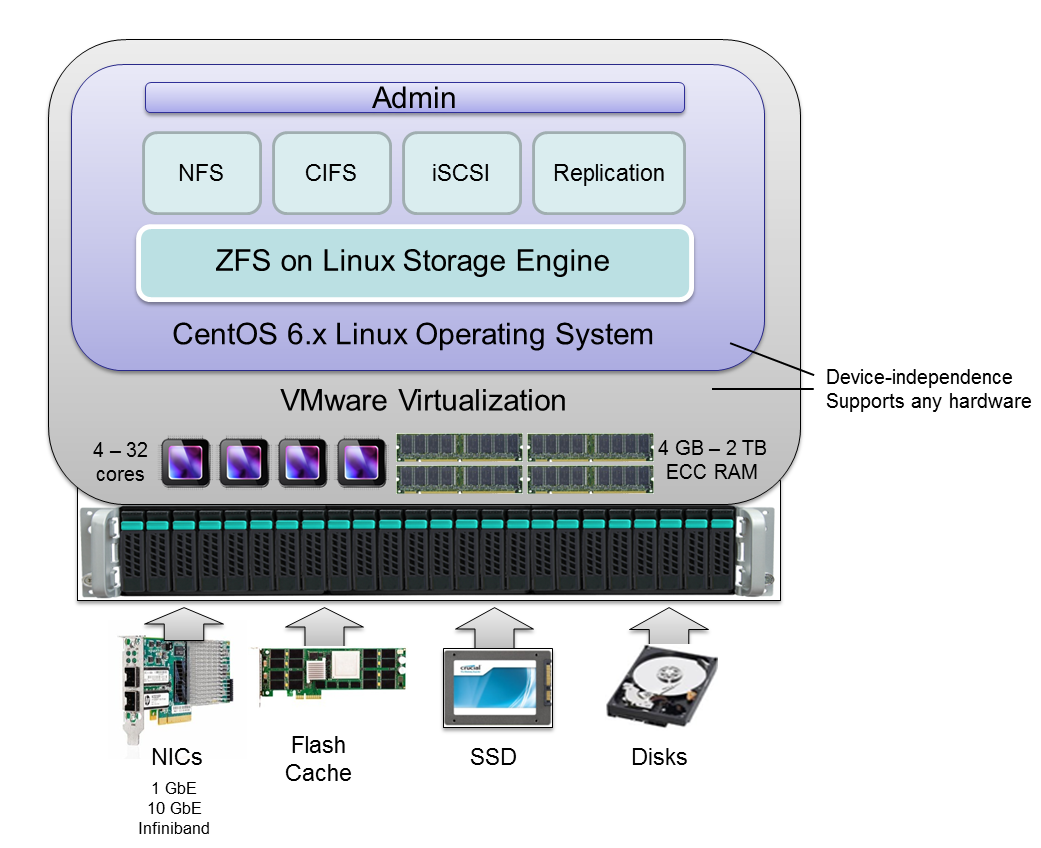

As the accompanying diagram shows, SoftNAS™ operates within the VMware virtualization environment, typically on a VMware host that is dedicated as a NAS storage server. VMware virtualization supports the broadest range of devices and resources, resulting in superior management and administration. Because SoftNAS runs within VMware, it inherits all the features and support that come with VMware, including vCenter and other administration tools.

On the VMware host for SoftNAS, one or more SoftNAS virtual machines are deployed using standard VMware best practices. From 4 to 32 (or more) cores and hyperthreads are allocated to the SoftNAS VM(s) for storage management. ECC memory (from 4 GB up to 2 TB) is allocated to the SoftNAS VM to serve as RAM cache, which dramatically increases read performance.

Any desired network topology can be supported via the VMware physical and virtual network switching layers - from 1 GbE to 10 GbE and InfiniBand for high throughput. It's common to deploy 1 GbE ports for administration of VMware and SoftNAS, plus bonded 10 GbE or InfiniBand ports for high-speed storage access. Since all networking flows through VMware, all vSwitch functionality is available, including load balancing, VLANs, throttling and more.

For extremely high-IOPS deployments (e.g., VDI and databases), a combination of RAM, PCIe flash cache cards and/or SSDs can be deployed for maximum performance.

The SoftNAS VM runs an x64 version of the robust, secure and stable CentOS 6.x Linux operating system. Linux provides a broad range of standard services, including NFS, CIFS (Samba) and iSCSI for NAS client connectivity.

Virtualization adds a minor amount of overhead (less than 5%) versus bare-metal operation. The additional CPU and memory available more than compensate for this nominal overhead. Virtualization also gives SoftNAS storage many of the same benefits that workload VMs enjoy (e.g., ease of administration and management, and unparalleled flexibility and device compatibility).

Note that a minimum of 4 vCPUs is required for proper operation. ZFS includes 256-bit block checksums, which consume some CPU. If you choose to use data compression and/or deduplication, additional CPU capacity may also be needed.
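To illustrate why block checksums cost CPU time, the following is a minimal sketch of per-block checksumming in Python. It is not how ZFS is implemented: SHA-256 from the standard library stands in for the 256-bit checksum, and the 128 KB block size and the function names are assumptions chosen for the example.

```python
import hashlib

# Hypothetical block size for this sketch (not a SoftNAS setting).
BLOCK_SIZE = 128 * 1024


def write_blocks(data):
    """Split data into blocks and store a 256-bit checksum beside each block."""
    blocks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
    # Every block written costs one hash computation.
    return [(block, hashlib.sha256(block).digest()) for block in blocks]


def find_corrupt_blocks(stored):
    """Recompute each checksum on read and return the indexes that mismatch."""
    return [
        index
        for index, (block, checksum) in enumerate(stored)
        if hashlib.sha256(block).digest() != checksum
    ]


if __name__ == "__main__":
    stored = write_blocks(bytes(300 * 1024))
    print("blocks written:", len(stored))
    print("corrupt blocks:", find_corrupt_blocks(stored))
```

Each block is hashed once on write and again on every verified read, which is why checksumming (and, more so, compression or deduplication) benefits from additional vCPUs.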

The ZFS storage engine makes very effective use of RAM for caching. As RAM is relatively inexpensive, it is recommended to provide the SoftNAS VM with as much RAM as you can make available for optimal performance. Always use ECC RAM with SoftNAS to ensure that memory read/write cycles do not silently introduce errors into your data (ECC detects and corrects single-bit memory errors as they occur).

Please review the VMware System Requirements for more details on recommended settings for the SoftNAS VM.

Networking Considerations

A minimum of 1 gigabit networking is required and will provide throughput up to approximately 120 MB/sec (close to the line speed of 1 GbE). 10 GbE offers 750+ MB/sec throughput. To reduce the overhead for intensive storage I/O workloads, it is highly recommended to configure the VMware hosts running SoftNAS and the heavy I/O workloads with "jumbo frames" (MTU 9000). It's usually best to allocate a separate vSwitch for storage with dual physical NICs and with their VMkernel ports configured for MTU 9000 (be sure to configure the physical switch ports for MTU 9000 as well). If possible, isolating storage onto its own VLAN is also a best practice.
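The throughput figures and the benefit of jumbo frames can be approximated with some simple arithmetic. The sketch below is a rough upper-bound estimate, not a measurement: it assumes 38 bytes of Ethernet framing per frame and 40 bytes of IPv4 and TCP headers with no options, and the function name is hypothetical.

```python
# Per-frame Ethernet cost on the wire (assumed): preamble and start delimiter 8,
# header 14, frame check sequence 4, inter-frame gap 12.
ETHERNET_OVERHEAD_BYTES = 38
# IPv4 header (20) plus TCP header (20), assuming no options.
IP_TCP_HEADER_BYTES = 40


def max_tcp_payload_mb_per_sec(link_gbps, mtu):
    """Estimate the best-case TCP payload rate in MB/sec for a link and MTU."""
    line_rate_bytes_per_sec = link_gbps * 1e9 / 8
    payload_bytes = mtu - IP_TCP_HEADER_BYTES
    wire_bytes = mtu + ETHERNET_OVERHEAD_BYTES
    return line_rate_bytes_per_sec * payload_bytes / wire_bytes / 1e6


if __name__ == "__main__":
    for gbps in (1, 10):
        for mtu in (1500, 9000):
            rate = max_tcp_payload_mb_per_sec(gbps, mtu)
            print(f"{gbps} GbE, MTU {mtu}: {rate:.1f} MB/sec")
```

Under these assumptions a 1 GbE link tops out at roughly 119 MB/sec with MTU 1500 and roughly 124 MB/sec with MTU 9000. Real-world throughput is lower, and much of the practical gain from jumbo frames comes from processing fewer packets per megabyte rather than from the header savings alone.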

If you are using dual switches for redundancy (usually a good idea and a best practice for HA configurations), be sure to configure your VMware host vSwitch for Active-Active operation and test switch port failover prior to placing SoftNAS into production (as you would with any other production VMware host).

You should choose static IPv4 addresses for SoftNAS. If you plan to assign storage to a separate VLAN (usually a good idea), ensure the vSwitch and physical switches are properly configured and available for use. For VMware-based storage systems, SoftNAS is typically deployed on an internal, private network. Access to the Internet from SoftNAS is required for certain features to work, such as Software Updates (which download updates from the softnas.com site) and NTP time synchronization (which can be used to keep the system clock accurate).

From an administration perspective, you will probably want browser-based access from the internal network only. Optionally, you may wish to use SSH for remote shell access. If you prefer to completely isolate access to SoftNAS from both internal and external users, then access will be restricted to the VMware console only (you can launch a local web browser on the graphical console's desktop). Note that you can add as many network interfaces to the SoftNAS VM as permitted by the VMware environment.

Prior to installation, allocate a static IP address for SoftNAS and be prepared to enter the usual network mask, default gateway and DNS details during network configuration. By default, SoftNAS is configured to initially boot in DHCP mode (but it is recommended to use a fixed, static IP address for production use).
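Before entering these values, it can help to sanity-check them together. The following is a small sketch using Python's standard `ipaddress` module. The function name and the example addresses are hypothetical, and the checks shown are a convenience, not part of SoftNAS.

```python
import ipaddress


def check_static_config(address, netmask, gateway, dns_servers):
    """Return a list of problems found in a planned static IPv4 configuration."""
    # Raises ValueError if the address or network mask is malformed.
    interface = ipaddress.IPv4Interface(f"{address}/{netmask}")
    network = interface.network
    gateway_ip = ipaddress.IPv4Address(gateway)

    problems = []
    if interface.ip in (network.network_address, network.broadcast_address):
        problems.append(f"{address} is the network or broadcast address of {network}")
    if gateway_ip not in network:
        problems.append(f"gateway {gateway} is outside {network}")
    if gateway_ip == interface.ip:
        problems.append("gateway and host address are identical")
    for server in dns_servers:
        # Raises ValueError if a DNS server entry is not a valid IPv4 address.
        ipaddress.IPv4Address(server)
    return problems


if __name__ == "__main__":
    # Example values only; substitute the addresses allocated for your network.
    issues = check_static_config(
        "192.168.10.50", "255.255.255.0", "192.168.10.1", ["192.168.10.2"]
    )
    print(issues or "configuration looks consistent")
```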

SoftNAS must have at least one NIC assigned for management and storage. It is best practice to provide separate NICs for management/administration, storage I/O and replication I/O.

Disk Device Considerations

The SoftNAS VM runs the Linux operating system, which boots from its own virtual hard disk (VMDK) on the local disk drive (host datastore).

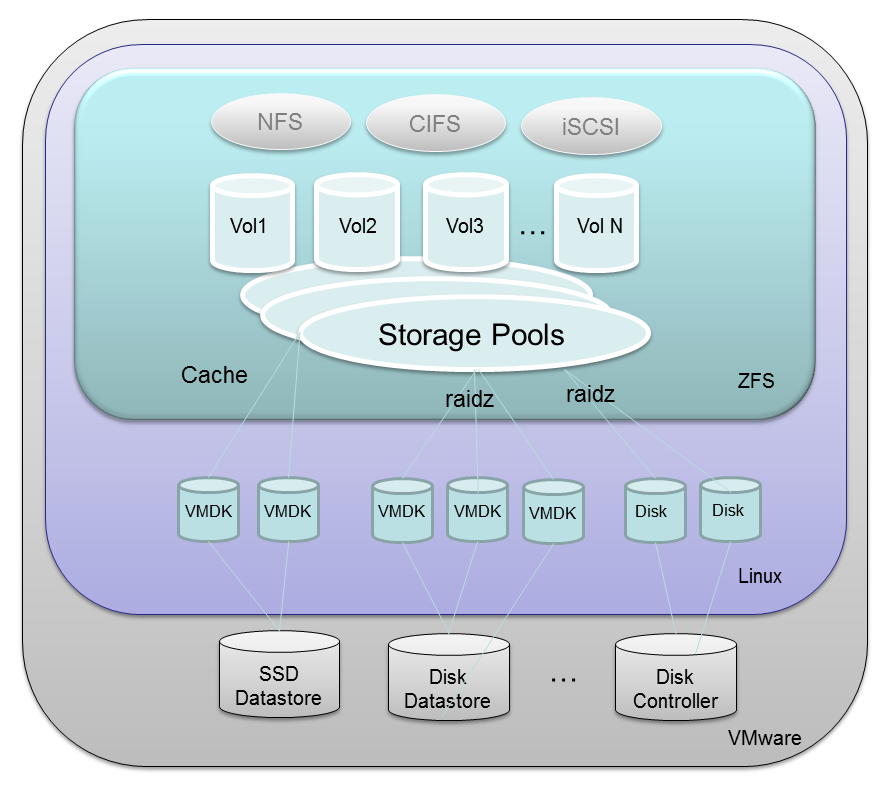

SoftNAS manages a collection of locally attached storage devices, as shown in the diagram. Physical storage devices are typically managed by VMware and presented as VMware datastores (it is also possible to pass a disk controller through VMware directly to Linux for direct-attached raw disks, although that configuration is more complex and less common).

VMDKs are used to attach disk storage to the SoftNAS Linux VM. SSD read and write cache devices are attached in a similar way. Note that a single SoftNAS VM can be deployed for dedicated applications, or multiple SoftNAS VMs can be deployed for service provider configurations, where different customers receive their own separate SoftNAS virtual storage server.

VMDKs can be arranged into RAID configurations within SoftNAS to form RAID-1/10 mirrors or RAIDZ-1 to RAIDZ-3 configurations, which provide additional data protection features.
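The capacity trade-off between these layouts can be estimated with a few lines of Python. This is a simplified sketch: it assumes equal-size disks, two-way mirrors for RAID-1/10, and one, two or three parity disks for RAIDZ-1, RAIDZ-2 and RAIDZ-3, and it ignores file system metadata and reserved space. The layout names and the function are hypothetical labels for the example.

```python
# Number of disks' worth of capacity used for parity in each RAIDZ level.
PARITY_DISKS = {"raidz1": 1, "raidz2": 2, "raidz3": 3}


def usable_capacity_gb(layout, disk_count, disk_size_gb):
    """Estimate usable capacity in GB for a group of equal-size disks."""
    if layout == "mirror":
        # RAID-1/10 built from two-way mirrors: half the raw capacity is usable.
        if disk_count < 2 or disk_count % 2 != 0:
            raise ValueError("two-way mirrors need an even number of disks")
        return (disk_count // 2) * disk_size_gb
    if layout not in PARITY_DISKS:
        raise ValueError(f"unknown layout: {layout}")
    parity = PARITY_DISKS[layout]
    if disk_count <= parity:
        raise ValueError(f"{layout} needs more than {parity} disks")
    return (disk_count - parity) * disk_size_gb


if __name__ == "__main__":
    for layout in ("mirror", "raidz1", "raidz2", "raidz3"):
        print(layout, usable_capacity_gb(layout, 8, 1000), "GB usable")
```

With eight 1000 GB disks, for example, mirroring yields 4000 GB while RAIDZ-2 yields 6000 GB, at the cost of slower writes.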

One or more VMDKs can be combined to create a "Storage Pool". Each storage pool provides an expandable aggregate of storage that can be shared by one or more Volumes. Volumes are then exported as NFS, shared as Windows CIFS shares or made available as iSCSI target LUNs.

Finally, when configuring the SoftNAS VM, for highest throughput it is recommended to change the SCSI controller type from "LSI Logic" to "Paravirtual", which provides the best disk I/O performance characteristics.

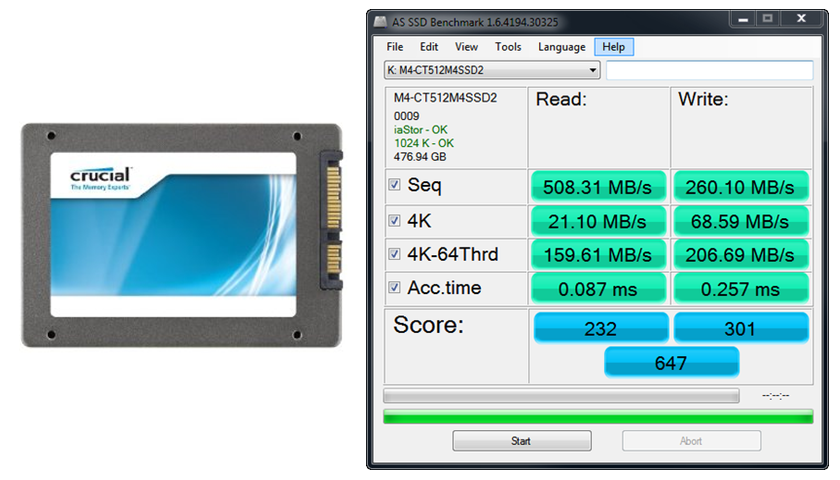

SSDs (solid-state drives) - SoftNAS supports the use of high-speed SSDs, including the latest, affordable MLC drives built on NAND flash memory, which typically provide access times 100 times faster than physical disk drives (e.g., access times in the 0.1 millisecond range are common, with transfer rates of 300 MB/sec to 450 MB/sec per drive). This equates to 40,000 to 50,000 or more IOPS (I/O operations per second).
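The relationship between access time and IOPS can be sketched with a simplified model in which each outstanding request takes one access time to complete. The queue depth parameter is an assumption introduced for the example (the figures above do not state one), and the function name is hypothetical.

```python
def estimated_iops(access_time_ms, queue_depth=1):
    """Estimate IOPS as outstanding requests divided by per-request access time."""
    if access_time_ms <= 0 or queue_depth < 1:
        raise ValueError("access time must be positive and queue depth at least 1")
    return queue_depth * 1000.0 / access_time_ms


if __name__ == "__main__":
    for depth in (1, 4, 5):
        iops = estimated_iops(0.1, depth)
        print(f"0.1 ms access time, queue depth {depth}: {iops:,.0f} IOPS")
```

Under this model, a 0.1 millisecond access time gives about 10,000 IOPS with one request in flight, so figures in the 40,000 to 50,000 range imply several requests being serviced in parallel.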

SSDs are excellent for use as both Read Cache and Write Log devices, augmenting main memory with additional high-performance caching and logging storage. Note that write log devices can be configured as RAID 1 mirrors, so you have double protection against drive failures and data loss.

Recommended drives include the Crucial M4 series SSDs, which are widely available and very affordable. The Crucial M4 512 GB drive shown below costs a few hundred dollars and provides very impressive throughput, extremely fast read access and respectable write speed. These drives now cost less than $1 per GB and are as much as 100 times faster than SAS drives. However, these drives have a limited life expectancy, estimated at 3 to 5 years depending upon how much write activity your workloads exhibit. If you have high-write workloads, SAS drives may be a better choice for long-term high-performance storage. If you have read-intensive workloads, it's hard to beat SSDs for performance and durability, especially at the price, and they are an excellent choice for read cache.

Lastly, SSDs consume about 1/10th the power of spinning drives like SAS and SATA disks, so if low-power operation is an objective, SSDs are a great solution.

10K and 15K SAS drives - SAS drives have long provided a solid foundation for storage systems. Assuming budgets permit, it is recommended to use 15K SAS drives for high-performance workloads, such as SQL Server databases, virtual desktop systems like RDS, VMware View and Citrix XenDesktop, Exchange Server and other performance-sensitive applications. As always, RAID 10 provides the best read and write performance, while RAID 5/6/7 provide excellent read performance with some write performance penalty. Adding SSDs as read cache and write log can greatly improve the performance of small writes and brief I/O bursts.

The Seagate Cheetah 15K SAS drive shown below, for example, provides high performance with proven long-term reliability.

SATA drives - Modern SATA drives provide access to high-capacity storage at relatively low performance levels. SATA storage is typically adequate for file servers and user data, backup data storage and many common applications that do not require high levels of performance. For best results, do not use SATA drives for high-performance workloads.

High-capacity SATA drives like the Seagate Barracuda 7200 3 TB drive shown below provide an enormous amount of storage when aggregated into a RAID array, and are very affordable at one to two hundred dollars each.

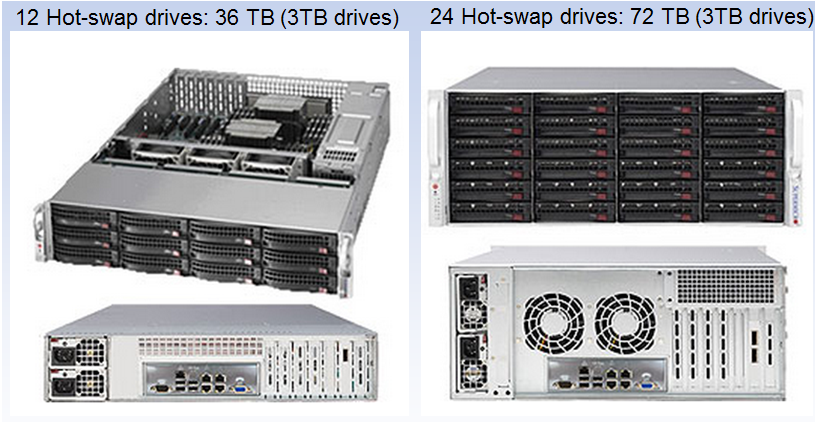

Storage Enclosures

There are many options for storage enclosures, including chassis with redundant power supplies and redundant cabling, in both 3.5" and 2.5" form factors.

You may also leverage JBOD arrays with SoftNAS.

After you have chosen one of the above methods of connecting JBOD (just a bunch of disks) storage to VMware, you are ready to install and configure SoftNAS. If you are just giving SoftNAS a try on a smaller scale, then almost any VMware-compatible disk storage will suffice as a starting point. Just remember, you don't need to spend a lot to get high-performance, high-quality NAS capabilities with SoftNAS, as there's enormous flexibility and choice available due to the broad compatibility provided by VMware and Linux.

Please review RAID Considerations for additional information on available and recommended data disk configurations.

Note: After the installation of SoftNAS, if needed, you can update the VMware Tools that ship with SoftNAS to ensure you have the latest version of VMware Tools installed for your VMware system. This will ensure you can gracefully shut down and reboot SoftNAS from the VMware vSphere or vCenter console (as with any other VM) and that you have the latest vmxnet3 drivers (required for optimal 10 GbE throughput).