RAID Considerations

SoftNAS provides a robust set of software RAID capabilities, including RAID 1 and RAID 10 mirrors, RAID 5 (single parity), RAID 6 (dual parity) and even RAID 7 (triple parity) support. It also includes hot spare drive capabilities and the ability to hot-swap spares into operation to replace a failed drive.

RAID 10 (striped mirrors) and RAID 6 (dual parity) are generally recommended for the best balance of read/write I/O performance and fault tolerance. Use RAID 10 for the most performance-sensitive storage pools (e.g., SQL Server, Virtual Desktop Server), as it provides the highest write IOPS, and RAID 6 for high-capacity, high-performance applications (e.g., Exchange Server).

SoftNAS is built atop the ZFS filesystem. Please take a few moments to become familiar with ZFS Best Practices for more details on storage pool, RAID and other performance, data integrity and reliability considerations.

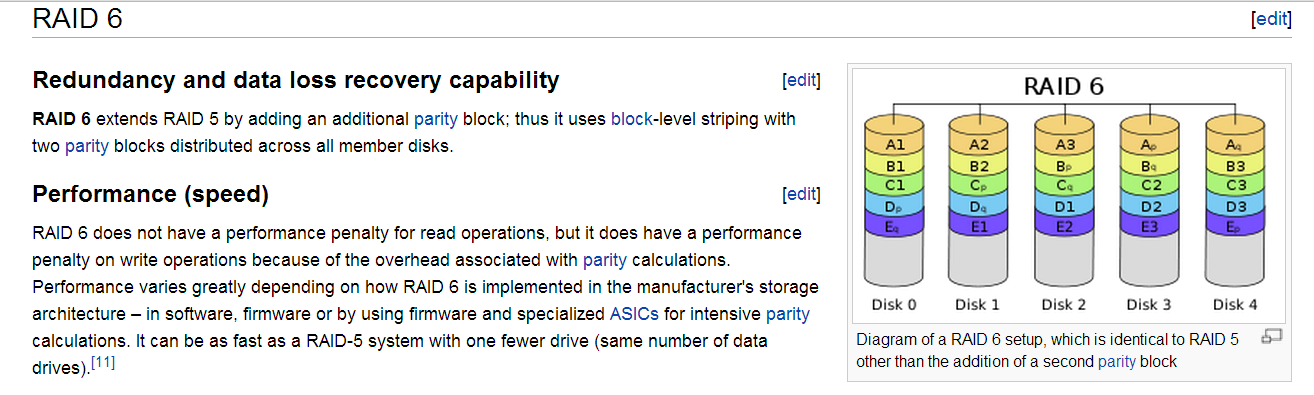

“RAID 6 - Wikipedia” <http://en.wikipedia.org/wiki/Standard_RAID_levels#RAID_6>

“RAID 0, RAID 1, RAID 5, RAID 10 Explained with Diagrams”, August 10, 2010, by Ramesh Natarajan <http://www.thegeekstuff.com/2010/08/raid-levels-tutorial/>

The following key points should be considered for RAID level 10:

- Minimum of 4 disks.

- Also called a “stripe of mirrors”.

- Excellent redundancy (as blocks are mirrored).

- Excellent performance (as blocks are striped).

- If the budget allows, RAID 10 is the best option for mission-critical applications (especially databases).

Hardware Controllers RAID and JBOD

Before getting into configurations, a note on disk controllers: if the controller you choose includes write caching, be sure it also includes a built-in battery backup that will hold onto any cached writes in the event of a power failure. This will increase the system's resiliency to failure.

SoftNAS can leverage hardware RAID arrays, where the disk RAID process is managed by the underlying disk controller. Hardware RAID may be available at the disk controller level, which can provide higher performing RAID 5 and RAID 6 configurations, as the better controllers are able to coalesce multiple writes and optimize disk arm write motion. When hardware RAID is used with SoftNAS, RAID administration takes place outside of SoftNAS.

When you choose to use hardware RAID, you can also use software RAID to increase redundancy, performance and recoverability. If you plan to store data for more than a few years, it is highly recommended to use the ZFS software RAID functionality for your VMDKs, even if you also use hardware RAID. ZFS detects and corrects "bit rot" and other errors that creep into storage media over time. Without it, you could end up with corrupted files over the long haul (e.g., after 4 to 5 years, or sooner if you use inexpensive disks that are more prone to developing long-term storage errors).

For example, let's say you have twenty 600 GB SAS drives. One way to configure storage would be:

- Create two hardware RAID 6 arrays, plus two spares. Each array consists of 8 drives: 6 data + 2 parity.

- Each hardware RAID array becomes a VMware datastore with approximately 3.3 TB of usable storage (two 3.3 TB datastores).

The next question is how to allocate the space within SoftNAS.

For maximum storage capacity and resiliency, you could create six 1.1 TB VMDKs from the two datastores and attach them to the SoftNAS VM. This results in six virtual disks of 1.1 TB each becoming available within SoftNAS.

Then, inside SoftNAS, you could add all six virtual disks to a single, large storage pool using RAID 5 (single parity). The advantage of combining hardware RAID 6 with software RAID 5 is that each hardware array can tolerate up to two concurrent drive failures, while the software RAID 5 provides an added layer of parity and recoverability.
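The capacity figures in this example can be checked with a short sketch. It assumes that drive sizes are quoted in decimal gigabytes and that the "approximately 3.3 TB" datastore figure is the same capacity reported in binary units (TiB). Both are assumptions, controller and filesystem overhead are ignored, and the function name is illustrative only.

```python
# Rough capacity arithmetic for the layered RAID example above.
# Assumption: drive sizes are decimal GB; datastore sizes are reported in TiB.
# Real usable space will be somewhat lower because of formatting overhead.

GB = 10**9
TIB = 2**40


def parity_array_usable_bytes(total_disks: int, parity_disks: int, disk_bytes: int) -> int:
    """Usable bytes of a parity RAID array: number of data disks times disk size."""
    return (total_disks - parity_disks) * disk_bytes


# Hardware layer: one RAID 6 array of 8 x 600 GB drives (6 data + 2 parity).
array_bytes = parity_array_usable_bytes(8, 2, 600 * GB)
array_tib = array_bytes / TIB

# Software layer: six 1.1 TB virtual disks in a single-parity (RAID 5) pool,
# so one disk's worth of space goes to parity.
vmdk_tb = 1.1
pool_usable_tb = (6 - 1) * vmdk_tb

print(f"per-array datastore: {array_tib:.2f} TiB")
print(f"pool usable capacity: {pool_usable_tb:.1f} TB")
```

Under these assumptions each array works out to about 3.27 TiB, in line with the 3.3 TB datastores above, and the software RAID 5 pool exposes roughly 5.5 TB of the 6.6 TB presented to SoftNAS.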

You can also effectively disable software RAID within SoftNAS by specifying RAID 0 (no redundancy, with striping) and assign all six 1.1 TB virtual disks to a storage pool. Please note that if hardware RAID is used, combined with RAID 0 in SoftNAS, the built-in ZFS filesystem will be unable to recover data, as it will have no parity information to work with. As a result, as with any hardware RAID system, your data integrity is reliant upon the hardware level RAID array and its integrity alone.

Alternatively, you could place the hardware controller into "JBOD mode" and just pass each disk through to SoftNAS directly as raw storage (no hardware RAID), and use the software RAID within SoftNAS to handle RAID.

It is important to give careful consideration to which type of RAID configuration will be used to provide the right balance of performance and resilience for your applications.

General Recommendations on Design of Storage Pools

The following recommendations apply to ZFS storage pools created on SoftNAS:

- For raidz1, do not use fewer than 3 disks, nor more than 7 disks in each vdev

- For raidz2, do not use fewer than 5 disks, nor more than 10 disks in each vdev

- For raidz3, do not use fewer than 7 disks, nor more than 15 disks in each vdev

- Do not use raidz1 for disks 1TB or greater in size (use RAIDZ2/3 or mirroring instead for better protection)

- Mirrors trump raidz every time. Far higher IOPS result from a RAID10 mirror pool than any raidz pool, given equal number of drives. This is especially true when using raw disks in situations where you need high write IOPS (typical of VM workloads)

- For 3TB+ size disks, 3-way mirrors begin to become more and more compelling

- Never mix disk sizes (within a few %) or speeds (RPM) within a single vdev

- Never mix disk sizes (within a few %) or speeds (RPM) within a zpool, except for l2arc & zil devices

- Never mix redundancy types for data vdevs in a zpool (use all RAID10 mirrors, all RAIDZ2, etc. instead of mixing redundancy types)

- Never mix disk counts on data vdevs within a zpool (if the first data vdev is 6 disks, all data vdevs should be 6 disks)

- If you have multiple JBODs, try to spread each vdev out so that the minimum number of its disks are in each JBOD. If you do this with enough JBODs for your chosen redundancy level, no single JBOD is a SPOF (Single Point of Failure), and if the JBODs themselves are spread across sufficient HBAs, you can even remove the HBAs as a SPOF.

- Use raidz2/3 over raidz1 plus a hot spare, because increased redundancy provides better data protection (the extra parity acts like a hot spare that is already online: raidz2 can sustain 2 drive failures and raidz3 can sustain 3).
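As a rough illustration, several of the guidelines above can be expressed as a simple pre-flight check on a planned pool layout. This is a minimal sketch, not a SoftNAS or ZFS tool: the `VdevPlan` type and the `check_vdev` and `check_pool` functions are hypothetical names, and only the disk-count, raidz1 disk-size, and mixing rules are covered.

```python
# Sketch: check a planned pool layout against some of the guidelines above.
# The limits are taken from the list; all names here are hypothetical.
from dataclasses import dataclass
from typing import List

# (minimum disks, maximum disks) per raidz level, per the recommendations above
RAIDZ_DISK_LIMITS = {"raidz1": (3, 7), "raidz2": (5, 10), "raidz3": (7, 15)}


@dataclass
class VdevPlan:
    kind: str            # "raidz1", "raidz2", "raidz3" or "mirror"
    disk_count: int
    disk_size_tb: float


def check_vdev(plan: VdevPlan) -> List[str]:
    """Return guideline warnings for one vdev (an empty list if none apply)."""
    warnings = []
    limits = RAIDZ_DISK_LIMITS.get(plan.kind)
    if limits is not None:
        low, high = limits
        if not low <= plan.disk_count <= high:
            warnings.append(
                f"{plan.kind}: use {low} to {high} disks per vdev, got {plan.disk_count}"
            )
    if plan.kind == "raidz1" and plan.disk_size_tb >= 1.0:
        warnings.append("raidz1 is not recommended for disks of 1 TB or larger")
    return warnings


def check_pool(vdevs: List[VdevPlan]) -> List[str]:
    """Return guideline warnings for all data vdevs in a planned pool."""
    warnings = [w for v in vdevs for w in check_vdev(v)]
    if len({v.kind for v in vdevs}) > 1:
        warnings.append("data vdevs mix redundancy types")
    if len({v.disk_count for v in vdevs}) > 1:
        warnings.append("data vdevs have different disk counts")
    return warnings


# Example: two identical 6-disk raidz2 vdevs of 4 TB disks raise no warnings.
print(check_pool([VdevPlan("raidz2", 6, 4.0), VdevPlan("raidz2", 6, 4.0)]))
```

A plan such as `VdevPlan("raidz1", 8, 2.0)` would trigger both the disk-count warning and the raidz1 disk-size warning.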

Virtual Devices and IOPS

Because SoftNAS is built atop ZFS, IOPS (I/O operations per second) are mostly a function of the number of virtual devices (vdevs) in a zpool, not the raw number of disks in the zpool. This is probably the single most important thing to understand, and it is commonly misunderstood. A vdev is a “virtual device”: a single device or partition that acts as a source of storage on which a pool can be created. For example, in VMware, each vdev can be a VMDK or raw disk device assigned to the SoftNAS VM.

A multi-device or multi-partition vdev can be in one of the following shapes:

- Stripe (technically, each chunk of a stripe is its own vdev)

- Mirror

- RaidZ

- A dynamic stripe of multiple mirror and/or RaidZ child vdevs

ZFS stripes writes across vdevs, not individual disks. A vdev is typically IOPS-bound to the speed of the slowest disk within it. So if you have one vdev of 100 disks, your zpool's raw IOPS potential is effectively that of a single disk, not 100. There are a couple of caveats here (such as the difference between write and read IOPS), but as a rule of thumb, if you assume each vdev in the zpool contributes only the IOPS of its single slowest disk, you won't end up surprised or disappointed.
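The rule of thumb can be sketched in a few lines. This is an illustrative estimate only: it assumes each vdev contributes the IOPS of its slowest disk and that the contributions add up across vdevs. The function name and the per-disk figure of 150 IOPS are made up for the example.

```python
# Rule-of-thumb estimate only: each vdev contributes roughly the IOPS of its
# slowest disk, and the pool's potential is the sum across its vdevs.
# The per-disk IOPS figures below are made-up illustrative numbers.
from typing import List


def estimate_pool_iops(vdevs: List[List[int]]) -> int:
    """vdevs is a list of vdevs; each vdev is a list of per-disk IOPS ratings."""
    return sum(min(disk_iops) for disk_iops in vdevs)


# Twelve disks rated at 150 IOPS each, arranged two different ways.
one_wide_vdev = [[150] * 12]      # a single 12-disk vdev
six_mirrors = [[150, 150]] * 6    # six 2-disk mirror vdevs

print(estimate_pool_iops(one_wide_vdev))  # 150
print(estimate_pool_iops(six_mirrors))    # 900
```

With the same twelve disks, the six-mirror layout has six times the estimated IOPS potential of the single wide vdev, which is why the number of vdevs matters more than the number of disks.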

About Read Caching

SSDs can be used for both read cache (L2ARC) and write log cache (ZIL). These are applied at the storage pool level, so the cache is shared across the volumes in that pool.

Read caching begins with RAM, which is the fastest first level cache. SoftNAS and the operating system take up about 1 GB of RAM, so the balance is available for use as cache memory; e.g., 16 GB VM, 15 GB cache max.

When the RAM cache is full, if there is a level 2 cache configured for the storage pool (e.g., an SSD configured as L2ARC), then the second-level read cache will begin to fill up. The most frequently accessed blocks will end up in the RAM cache. If the read cache device were to fail for any reason, there is no risk of data loss, just a loss of performance until it is replaced.

About Write Logs

Attaching a high-speed SSD write log to a storage pool is a great way to increase the system's ability to accept large bursts of incoming writes and small writes, as these requests are effectively cached within the write log.

The write log effectively becomes an extension of the storage pool, and is an essential part of the pool and its integrity. For that reason, it is important to ensure write log devices are mirrored pairs (or a hardware RAID array), so that if one of the devices fails, the pool is not impacted. The write log cache quickly accepts incoming write requests, allowing each request to be completed immediately for faster writes. Periodically, the cached writes are written to the main storage pool spindles.

If you use hardware RAID 1 SSDs (a configuration we use with customers), the mirrored array appears as a single datastore (e.g., in VMware). Next, attach two virtual disks (VMDKs) to the SoftNAS VM, and then create a RAID 1 mirror of the two VMDKs. This will provide you with the highest possible integrity for the write log.

When you choose a disk controller, if it includes write caching, be sure it also includes a built-in battery backup that will hold onto any cached writes in the event of a power failure. This will increase the system's resiliency to failure.

Sharing Read Cache and Write Log Devices

If running under VMware (for example), it is possible to share SSD devices across multiple storage pools. For example, we have created a RAID 5 array of SSDs (e.g., 7 x 128 GB) using hardware RAID, then presented that array to VMware so that it becomes a read cache datastore. Portions of that SSD read cache datastore can then be assigned to each storage pool.

Of course, the raw SSD devices can also be passed directly through to the SoftNAS VM. For write logs, we typically prefer mirrored pairs, but a hardware RAID 5 array is also acceptable, as it provides reasonable redundancy. It should be possible to use VM thin-provisioning to share the RAID 5 cache across less critical storage pools, or you can thick-provision chunks of cache to ensure certain pools receive dedicated read cache assignments.

About UPS

You should always have a UPS and/or backup power for your business-critical storage systems. For a power failure that will outlast the available UPS battery time, you can typically configure most UPS devices to integrate with an application that performs a graceful power-down of your servers. Power off the workload VMs that use the storage first, then power down SoftNAS last in the graceful shutdown sequence.

About Backups

RAID and NAS snapshots should not be your only means of backup. Be certain that your critical data is always backed up using best practices for data backup and archival, so you are certain to have a complete copy of your data available for a deep recovery, should it ever be required. SoftNAS greatly reduces the likelihood of such a recovery being required, but when it comes to your data, there's never a substitute for having a reliable data backup system in place to protect your business and its data fully.